Lidarmos: LiDAR-Based Motion Segmentation in Focus

Lidarmos, a stylized abbreviation for LiDAR-MOS (LiDAR-based Moving Object Segmentation), is a cutting-edge technology that plays a pivotal role in autonomous driving and robotics. By analyzing 3D LiDAR data through sequential scans, it enables the real-time distinction between moving objects—such as vehicles, cyclists, and pedestrians—and static environments like buildings, poles, and trees.

The approach combines deep learning with temporal information to achieve motion-aware perception, which makes it valuable for safety-critical tasks such as collision avoidance, mapping, localization, and navigation. Lidarmos grew out of the SemanticKITTI benchmark and has spurred further work, with MambaMOS and HeLiMOS improving precision and adaptability in changing conditions.

Evolution of Lidarmos

Lidarmos set out to address a pressing need in 3D perception: motion awareness. Conventional point cloud segmentation treated all objects alike and ignored how a scene changes over time. Lidarmos shifted the focus to motion-centric segmentation, which is redefining perception for robots and self-driving vehicles.

Earlier approaches, such as LiDAR-based SLAM, could detect objects but performed poorly in dynamic scenes because they could not segment motion. Lidarmos closed this gap by feeding combined spatial and temporal information through convolutional neural networks to identify movement patterns. As a result, systems can update maps dynamically and respond to unexpected events on the road, e.g., a pedestrian suddenly crossing or a cyclist entering a blind spot.

Evolution Timeline

| Era | Methodology | Limitations | Improvements in Lidarmos |
| --- | --- | --- | --- |
| Pre-2019 | Static segmentation | No distinction between motion | General scene understanding |
| 2019 – Present | SemanticKITTI + CNNs | High computational cost | Real-time segmentation |
| Post-2021 | MambaMOS, HeLiMOS | Enhanced dynamic awareness | Greater accuracy, lower latency |

How It Works

1. Range and Residual Images

The LiDAR data is projected into spherical images called range images, in which each pixel records the distance from the sensor to the corresponding point. Residual images help detect movement by comparing successive frames: the per-pixel difference reveals changes in position.
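The projection and the frame comparison can be sketched in a few lines. This is a minimal illustration rather than the Lidarmos implementation: the function names, the image size, and the vertical field of view (3° up, 25° down, values picked for a 64-beam sensor) are assumptions, and the previous scan is assumed to be already transformed into the current sensor frame. It uses plain Python lists to stay self-contained; a practical version would be vectorized.

```python
import math

def project_to_range_image(points, height=64, width=2048,
                           fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project (x, y, z) points into a height x width range image.

    Each pixel holds the distance to the closest point that falls into it,
    or 0.0 where no point landed. Image size and field of view are assumed
    defaults and should be adjusted to the sensor at hand.
    """
    fov_down = math.radians(fov_down_deg)
    fov = math.radians(fov_up_deg) - fov_down
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # a point at the sensor origin has no direction
        yaw = math.atan2(y, x)      # horizontal angle
        pitch = math.asin(z / r)    # vertical angle
        # Normalize both angles to [0, 1], then scale to pixel coordinates.
        u = 0.5 * (1.0 - yaw / math.pi) * width
        v = (1.0 - (pitch - fov_down) / fov) * height
        col = min(width - 1, max(0, int(u)))
        row = min(height - 1, max(0, int(v)))
        # Keep the nearest return when several points share a pixel.
        if image[row][col] == 0.0 or r < image[row][col]:
            image[row][col] = r
    return image

def residual_image(current, previous):
    """Per-pixel range difference, normalized by the current range.

    Pixels that are empty in either image are set to 0.0.
    """
    return [
        [abs(c - p) / c if c > 0.0 and p > 0.0 else 0.0
         for c, p in zip(cur_row, prev_row)]
        for cur_row, prev_row in zip(current, previous)
    ]
```

Large residual values mark pixels whose range changed between the two frames, which is the cue the later stages use to flag candidate moving points. Normalizing by the current range is one common choice; an unnormalized difference works the same way structurally.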

2. Deep Learning Models

Lidarmos uses temporal CNN architectures trained on annotated scenes, such as those in SemanticKITTI, to separate dynamic from static points with high precision. Such models are refined through 3D convolution and cross-frame consistency checks.
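One simple way to read "cross-frame consistency check" is a majority vote over the predictions of several consecutive frames. The sketch below is an assumption about what such a check could look like, not the method used by any particular model; `temporal_vote` is a hypothetical name, and the masks are assumed to be aligned to the same image grid.

```python
def temporal_vote(masks, min_votes=None):
    """Keep a pixel as moving only if enough frames agree.

    masks: list of equally sized 2D lists of 0/1 predictions.
    min_votes: required number of agreeing frames; defaults to a
    strict majority.
    """
    if not masks:
        raise ValueError("need at least one mask")
    if min_votes is None:
        min_votes = len(masks) // 2 + 1
    height, width = len(masks[0]), len(masks[0][0])
    voted = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            votes = sum(mask[row][col] for mask in masks)
            voted[row][col] = 1 if votes >= min_votes else 0
    return voted
```

A vote like this suppresses one-frame flickers at the cost of reacting a little later when an object starts or stops moving.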

3. Motion Segmentation Pipeline

- Pre-processing: Conversion of LiDAR scans into range/residual images.

- Inference: Deep networks model temporal changes together with spatial structure.

- Post-processing: Filtering, smoothing, and fusion with map priors.

The pipeline is designed to stay reliable in crowded or occluded environments.
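The stages after pre-processing can be sketched as two small functions chained together. Everything here is illustrative: `infer` is a fixed threshold standing in for the trained network, `postprocess` is one possible filtering rule (drop moving pixels that have no moving neighbor), and the threshold values are assumptions. The input is assumed to be a residual image already produced by pre-processing, and map prior fusion is left out.

```python
def infer(residual, threshold=0.5):
    """Stand-in for the network: flag pixels whose residual is large."""
    return [[1 if value > threshold else 0 for value in row]
            for row in residual]

def postprocess(mask, min_neighbors=1):
    """Drop moving pixels with fewer than min_neighbors moving 4-neighbors.

    Horizontal wrap-around of the range image is ignored for simplicity.
    """
    height, width = len(mask), len(mask[0])
    cleaned = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if not mask[r][c]:
                continue
            neighbors = 0
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < height and 0 <= cc < width:
                    neighbors += mask[rr][cc]
            if neighbors >= min_neighbors:
                cleaned[r][c] = 1
    return cleaned

def segment_moving(residual):
    """Run inference and post-processing on a residual image."""
    return postprocess(infer(residual))
```

Keeping the stages as separate functions makes it easy to swap the threshold for a real model without touching the filtering step.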

User Perspectives

Amit G., Robotics Engineer – Germany:

“Lidarmos helped us reduce dynamic noise in our mobile mapping system. It’s become an integral part of our SLAM pipeline.”

Jia Lee, Researcher – Seoul:

“The segmentation results are precise and consistent. Unlike older models, Lidarmos doesn’t confuse parked vehicles with moving ones. That’s a huge win.”

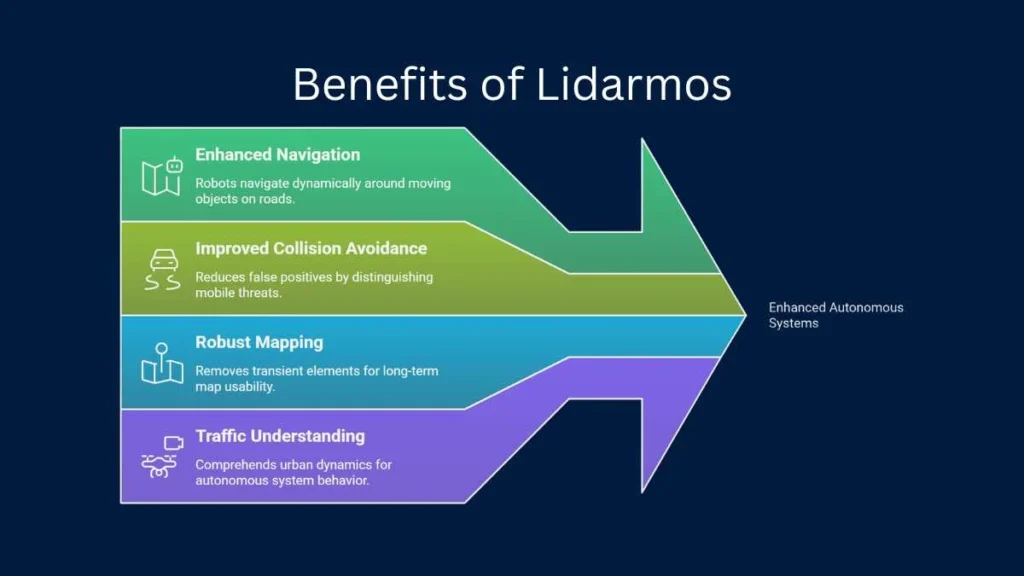

Benefits of Lidarmos

- Enhanced Navigation: Helps robots and cars plan their motion around moving objects on the road.

- Improved Collision Avoidance: Reduces false positives by distinguishing stationary hazards from moving ones.

- Robust Mapping: Removes transient features such as pedestrians from maps so the maps stay useful over the long term.

- Traffic Understanding: Helps self-driving cars interpret urban traffic behavior and adapt to it.
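The mapping benefit comes down to a filtering step: only points labeled static are added to the long-term map. The sketch below assumes per-point moving flags are already available from the segmentation; the function names are hypothetical and the map is modeled as a plain list of points.

```python
def split_static_dynamic(points, moving_flags):
    """Partition points into (static, dynamic) using per-point flags."""
    if len(points) != len(moving_flags):
        raise ValueError("points and moving_flags must have the same length")
    static = [p for p, moving in zip(points, moving_flags) if not moving]
    dynamic = [p for p, moving in zip(points, moving_flags) if moving]
    return static, dynamic

def update_map(map_points, scan_points, moving_flags):
    """Append only the static points of a scan to the map (in place)."""
    static, _ = split_static_dynamic(scan_points, moving_flags)
    map_points.extend(static)
    return map_points
```

The dynamic points are not discarded by `split_static_dynamic`, so the same call can feed a tracker or a collision checker while the map stays free of transient objects.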

Challenges Faced

Despite its benefits, Lidarmos has limitations. One of the main problems is the high computational load of running deep learning in real time: processing dense 3D point clouds across multiple frames requires high-end GPUs and careful memory management. In addition, low-reflectivity surfaces, e.g., black cars or wet roads, can degrade LiDAR accuracy and sometimes cause segmentation failures.

Another problem is annotating moving objects to build training datasets. Labeling time-sequenced data is labor-intensive, and manual labels can be inconsistent. Progress in semi-supervised training and synthetic data generation is steadily reducing these obstacles.

Future Enhancements

The role of Lidarmos is growing. MambaMOS builds on a state space (Mamba) sequence model to couple temporal and spatial information, and HeLiMOS contributes a dataset that spans heterogeneous LiDAR sensors to improve generalization. The next stage in this area is multi-modal fusion, in which LiDAR-MOS is combined with camera and radar signals to provide a consistent understanding of the scene.

In addition, edge AI hardware is becoming more capable of real-time processing on compact robots and drones. With 5G and edge computing, similar LiDAR-based systems may one day power real-time crowd monitoring, AI-driven smart city infrastructure, and even personal AI assistants built into AR glasses.

Conclusion

Lidarmos is at the forefront of 3D perception, transforming the way machines perceive and act in the world. It is critical to current robotics and autonomous navigation systems because it combines LiDAR precision, temporal depth, and the flexibility of deep learning.

As autonomous systems become more widely used and familiar, motion segmentation technologies such as Lidarmos will not only increase safety and efficiency but also lay the groundwork for more intelligent, context-aware machines, which will become the standard for AI-powered mobility.
